Authors:

(1) Gao Yu Lee, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore;

(2) Tanmoy Dam, School of Mechanical and Aerospace Engineering, Nanyang Technological University, 65 Nanyang Drive, 637460, Singapore and Department of Computer Science, The University of New Orleans, New Orleans, 2000 Lakeshore Drive, LA 70148, USA;

(3) Md Meftahul Ferdaus, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore;

(4) Daniel Puiu Poenar, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore;

(5) Vu N. Duong, School of Mechanical and Aerospace Engineering, Nanyang Technological University, 65 Nanyang Drive, 637460, Singapore.

Table of Links

- Abstract and Introduction

- Backgrounds

- Type of remote sensing sensor data

- Benchmark remote sensing datasets for evaluating learning models

- Evaluation metrics for few-shot remote sensing

- Recent few-shot learning techniques in remote sensing

- Few-shot based object detection and segmentation in remote sensing

- Discussions

- Numerical experimentation of few-shot classification on UAV-based dataset

- Explainable AI (XAI) in Remote Sensing

- Conclusions and Future Directions

- Acknowledgements, Declarations, and References

Abstract

Recent advancements have significantly improved the efficiency and effectiveness of deep learning methods for image-based remote sensing tasks. However, the requirement for large amounts of labeled data can limit the applicability of deep neural networks to existing remote sensing datasets. To overcome this challenge, few-shot learning has emerged as a valuable approach for enabling learning with limited data. While previous research has evaluated the effectiveness of few-shot learning methods on satellite-based datasets, little attention has been paid to exploring the applications of these methods to datasets obtained from Unmanned Aerial Vehicles (UAVs), which are increasingly used in remote sensing studies. In this review, we provide an up-to-date overview of both existing and newly proposed few-shot classification techniques, along with appropriate datasets that are used for both satellite-based and UAV-based data. Our systematic approach demonstrates that few-shot learning can effectively adapt to the broader and more diverse perspectives that UAV-based platforms can provide. We also evaluate some state-of-the-art few-shot approaches on a UAV disaster scene classification dataset, yielding promising results. We emphasize the importance of integrating explainable AI (XAI) techniques like attention maps and prototype analysis to increase the transparency, accountability, and trustworthiness of few-shot models for remote sensing. Key challenges and future research directions are identified, including tailored few-shot methods for UAVs, extending to unseen tasks like segmentation, and developing optimized XAI techniques suited for few-shot remote sensing problems. This review aims to provide researchers and practitioners with an improved understanding of few-shot learning’s capabilities and limitations in remote sensing, while highlighting open problems to guide future progress in efficient, reliable, and interpretable few-shot methods.

1 Introduction

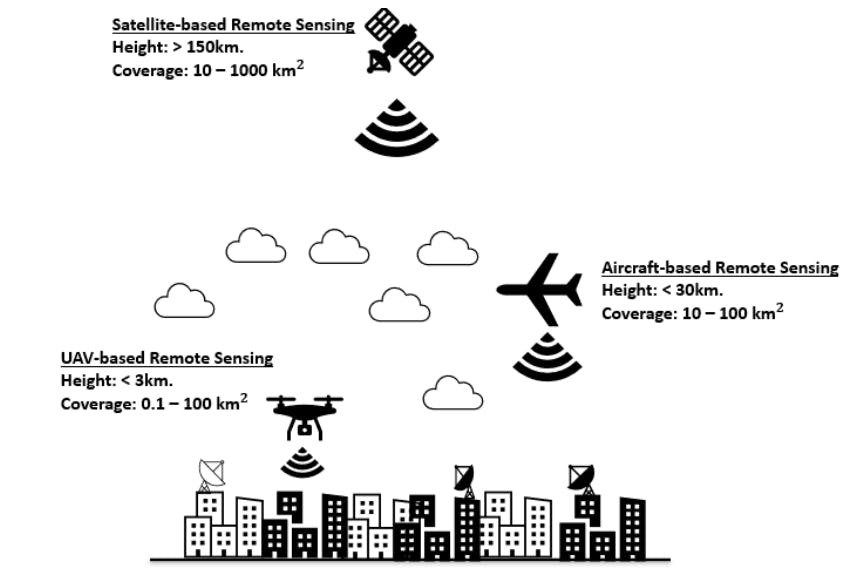

The last few decades saw significant advancements in remote sensing imaging technology. Remote sensing technologies nowadays encompass not only the traditional satellite-based platforms, but also data collected from Unmanned Aerial Vehicles (UAVs). Figure 1 illustrates the typical height at which such platforms navigate as well as their estimated coverage area [1] for an urban setting. The modern airborne sensors attached to such platforms can cover and map a significant portion of the earth’s surface with better spatial and temporal resolutions, making them essential for earth-based or environmental observations such as geodesy and disaster relief. Automatic analysis of remote sensing images is usually multi-modal, meaning that optical, radar, or infrared sensors could be used, and such data could be distributed geographically and globally in an increasingly efficient manner. With advances in artificial intelligence, deep learning approaches have found their way into the remote sensing community, which, together with the increase in remote sensing data availability, has enabled more effective scene understanding, object identification, and tracking.

![Fig. 1 A simple pictorial illustration of the type of remote sensing platforms, as well as the typical height in which such platforms navigate. The values for their possible coverage area for an urban setting are also illustrated and are adapted from [1].](https://cdn.hackernoon.com/images/null-mx0346k.png)

Convolutional Neural Networks (CNNs) have become popular in object recognition, detection, and semantic or instance segmentation of remote sensing images, typically using RGB images as input, which undergo convolution, normalization, and pooling operations. The convolution operation is effective in accounting for the local interactions between features of a pixel. While the remote sensing community has made great strides in multi-spectral satellite-based image classification, tracking, and semantic and instance segmentation, the limited receptive field of CNNs makes it difficult to model long-range dependencies in an image. Vision transformers (ViTs) were proposed to address this issue by leveraging the self-attention mechanism to capture global interactions between different parts of a sequence. ViTs have demonstrated high performance on benchmark datasets, competing with the best CNN-based methods. Consequently, the remote sensing community has rapidly proposed ViT-based methods for classifying high-resolution images. With pre-trained weights and transfer learning techniques, CNNs and ViTs can retain their classification performance at a lower computational cost, which is essential for platforms with limited computational resources, such as UAVs.
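To make the contrast between local convolution and global self-attention concrete, the following is a minimal NumPy sketch of single-head scaled dot-product self-attention over a sequence of patch embeddings. It is an illustration only and not the architecture of any specific ViT discussed in this review; the patch count, embedding size, and random projection matrices `w_q`, `w_k`, `w_v` are assumptions chosen for the example.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (num_tokens, dim) array of patch embeddings.
    Returns the attended output and the (num_tokens, num_tokens) weight matrix.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

# Hypothetical sizes: a 4x4 grid of image patches, each embedded in 8 dimensions.
rng = np.random.default_rng(0)
num_patches, dim = 16, 8
patches = rng.normal(size=(num_patches, dim))
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) for _ in range(3))

out, attn = self_attention(patches, w_q, w_k, w_v)
```

Each row of `attn` assigns a non-zero weight to every patch in the image, which is the sense in which self-attention captures global interactions, whereas a convolution kernel only mixes values inside its local window.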

However, both CNNs and ViTs require large numbers of training samples for accurate classification, and some of these methods may not be feasible for critical tasks such as UAV search-and-rescue. It would be beneficial, for instance, if the platforms were able to quickly identify and generalize disaster scenes solely from analyzing a small subset of the captured frames. Few-shot classification approaches address these needs: the goal is to enable the network to quickly generalize to unseen test classes given only a small set of training images. A framework like this closely resembles how the human brain learns in real life. Like ViTs, few-shot learning has also ignited new research in remote sensing, and applications to land cover classification in the RGB domain ([2], [3]) and hyperspectral classification ([4], [5]) have been observed. The approaches have also been extended to object detection [6] and segmentation [7]. These emerging works are as recent as those utilizing ViTs. Since a review of ViT approaches for various domains in remote sensing [8] has been reported, a review of few-shot-based approaches in remote sensing is worthwhile to keep interested researchers up to date with the recent progress in this area.
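As a rough illustration of the few-shot setting described above, the sketch below classifies query samples in a toy 3-way 5-shot episode by assigning each query to the nearest class prototype, where a prototype is the mean embedding of the labelled support samples of that class. This is one common metric-based strategy and is shown only as an example, not as the method of any particular work cited here; the synthetic embeddings, the class centres, and the function names `prototypes` and `classify` are assumptions made for this sketch.

```python
import numpy as np

def prototypes(support_x, support_y, n_way):
    """Mean embedding per class, computed from the labelled support set."""
    return np.stack([support_x[support_y == c].mean(axis=0) for c in range(n_way)])

def classify(query_x, protos):
    """Assign each query to the class whose prototype is nearest (Euclidean)."""
    dists = np.linalg.norm(query_x[:, None, :] - protos[None, :, :], axis=-1)
    return dists.argmin(axis=1)

# Toy 3-way 5-shot episode with synthetic 16-dimensional embeddings.
rng = np.random.default_rng(0)
n_way, k_shot, n_query, dim = 3, 5, 4, 16
centers = 10.0 * np.eye(n_way, dim)  # well-separated, hypothetical class centres

support_y = np.repeat(np.arange(n_way), k_shot)
query_y = np.repeat(np.arange(n_way), n_query)
support_x = centers[support_y] + rng.normal(size=(n_way * k_shot, dim))
query_x = centers[query_y] + rng.normal(size=(n_way * n_query, dim))

pred = classify(query_x, prototypes(support_x, support_y, n_way))
accuracy = (pred == query_y).mean()
```

In practice the embeddings would come from a trained CNN or ViT backbone rather than from synthetic Gaussian clusters, and the classes seen at test time would differ from those used to train that backbone.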

We have taken note that a related review has already been conducted in [9]. A notable omission in the previous review is the failure to acknowledge the significance of interpretable machine learning models in this field. Integrating interpretable machine learning into remote sensing image classification can further enhance CNNs and ViTs’ performance. By providing insights into the decision-making process of these models, interpretable machine learning can increase their transparency and accountability, which is particularly relevant in applications where high-stakes decisions are made based on their outputs, such as disaster response and environmental monitoring. For instance, saliency maps can be generated to highlight regions of images that are most relevant for the model’s decision, providing visual explanations for its predictions. Furthermore, interpretable machine learning can aid in identifying potential biases and errors in the training data, as well as enhancing the robustness and generalization of the model. In remote sensing, interpretable machine learning can also facilitate the integration of expert knowledge into the model, enabling the inclusion of physical and environmental constraints in the classification process. This can enhance the accuracy and interpretability of the model, allowing for more informed decision-making. In short, the integration of interpretable machine learning in remote sensing image classification can provide a valuable tool for enhancing the transparency, accountability, and accuracy of CNNs and ViTs. By providing insights into the decision-making process of these models, interpretable machine learning can help build trust in their outputs and facilitate their use in critical applications.
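The saliency-map idea mentioned above can be sketched with occlusion sensitivity, one simple model-agnostic way of estimating which image regions matter for a prediction: each patch is masked in turn and the resulting drop in the class score is recorded. The code below is a minimal sketch under stated assumptions; `toy_score` is a hypothetical stand-in for a real classifier's class score, and the patch size and fill value are arbitrary choices.

```python
import numpy as np

def occlusion_saliency(image, score_fn, patch=4, fill=0.0):
    """Score drop when each patch is masked; a larger drop marks a more relevant region."""
    base = score_fn(image)
    h, w = image.shape[:2]
    saliency = np.zeros((h, w))
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            saliency[top:top + patch, left:left + patch] = base - score_fn(occluded)
    return saliency

def toy_score(img):
    """Hypothetical class score that depends only on the top-left 8x8 corner."""
    return float(img[:8, :8].mean())

image = np.ones((16, 16))
sal = occlusion_saliency(image, toy_score)
```

Here `sal` is non-zero only over the top-left corner, the region the toy score actually depends on, which is the kind of visual explanation a saliency map is meant to provide. Gradient-based or attention-based maps are alternatives when the model is differentiable or attention-based.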

The purpose of this additional review is to address several further gaps that were not covered in the previous review [9]. These gaps are as follows:

• Their exploration of remote sensing datasets focused on satellite-based imagery and the few-shot learning techniques associated with such datasets. With the emergence of UAV-based remote sensing datasets, however, we note a lack of coverage of works that have been applied to and evaluated on such datasets. Furthermore, datasets and learning-based techniques associated with UAVs could also benefit from few-shot learning approaches due to the limited computational resources of these platforms. The amount of data collected through UAVs is likewise limited, which emphasizes the need for efficient learning methods.

• Quantitatively speaking, satellite-based remote sensing datasets offer a considerably wider field of view and greater coverage, allowing for the simultaneous capture of multiple object classes or labels in a single scene, a setting referred to as multi-label classification. On the other hand, the smaller coverage area of UAV-based remote sensing datasets often provides data that is suitable only for single-label image classification. Consequently, methods that address the single-label setting in UAV-based remote sensing can be easily distinguished from those designed for multi-label classification on satellite-based datasets. It is essential, therefore, to take into account the characteristics of the remote sensing dataset when devising and evaluating image classification methods in this field.

• As emphasized and illustrated by [9], the utilization of few-shot learning-based techniques for remote sensing has been on the rise since 2012. As the aforementioned work was published in 2021, we can expect an even greater proliferation of such approaches for remote sensing since then. In light of the dynamic nature of this research domain, our review aims to disseminate the most up-to-date information available on the topic. Through this approach, we seek to provide an improved understanding of the recent advances in few-shot learning-based methods for remote sensing, allowing for a comprehensive assessment of their potential applications and limitations.

In summary, our main contributions in this review article are as follows:

• In this work, we present and holistically summarize the applications of few-shot learning-based approaches in both satellite-based and UAV-based remote sensing images, focusing on image classification alone, but extending the review work conducted by [9] in terms of the explored remote sensing datasets. Our analysis serves to assist readers and researchers alike, allowing them to bridge gaps between current state-of-the-art image-based classification techniques in remote sensing, which may aid in promoting further progress in the field.

• As part of our discussion on the recent progress in the field of remote sensing regarding few-shot classification, we examined how CNNs and transformer-based approaches can be adapted to remote sensing datasets, expanding the potential of these methods in this domain.

• Our work delved into a thorough discussion of the challenges and research directions concerning few-shot learning in remote sensing. We aimed to identify the feasibility and effectiveness of different learning approaches in this field, focusing on their potential applications in UAV-based classification datasets. Through this approach, we sought to shed light on the potential limitations and further research needed to improve the efficacy of few-shot learning-based techniques in the domain of remote sensing, paving the way for more advanced and sophisticated classification methods to be developed in the future.

• We also emphasized the significance of integrating XAI to improve transparency and reliability of few-shot learning-based techniques in remote sensing. Our objective was to offer researchers and practitioners a better comprehension of the possible applications and constraints of these techniques. We also aimed to identify novel research directions to devise more effective and interpretable few-shot learning-based methods for image classification in remote sensing.

The remainder of this paper is structured as follows: In Section 2, we provide a quick background on few-shot learning and present example networks. Section 3 discusses related review works in remote sensing, and Sections 4, 5, and 6 provide brief highlights of the type of remote sensing data, common evaluation metrics utilized, and benchmark datasets commonly used, respectively.

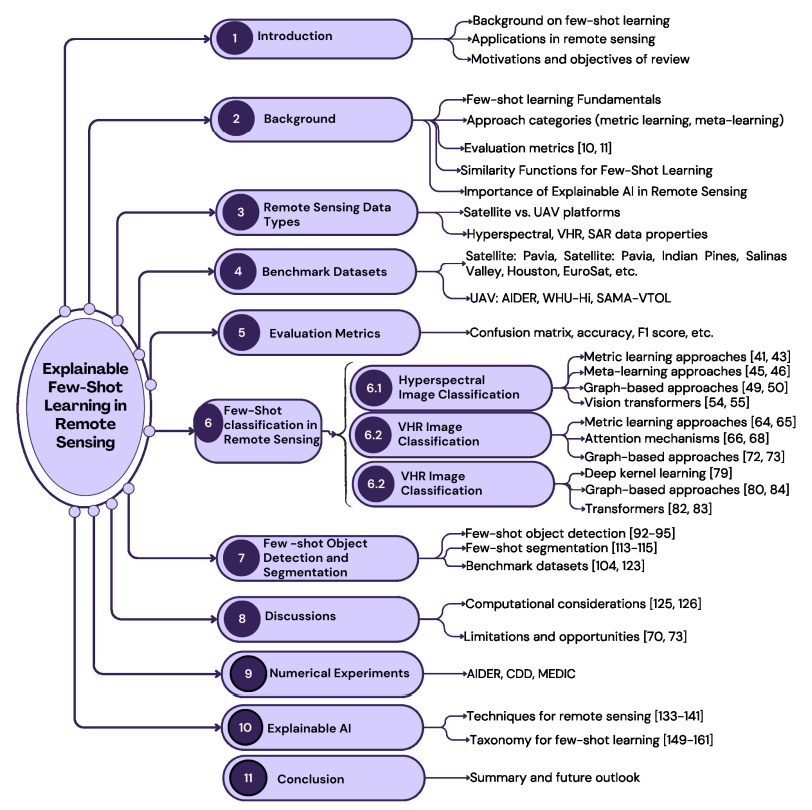

Section 7 delves into some up-to-date existing works on few-shot classification in the hyperspectral, Very High Resolution (VHR), and Synthetic Aperture Radar (SAR) data domains. In Section 8, we outline some implications and limitations of current approaches, and in Section 9, we quantitatively evaluate some existing methods on a UAV-based dataset, demonstrating the feasibility of such approaches for UAV applications. Finally, in Section 10, we conclude this review paper. An overview of the scope covered in our review of Explainable Few-Shot Learning for Remote Sensing is illustrated in Figure 2.

This paper is